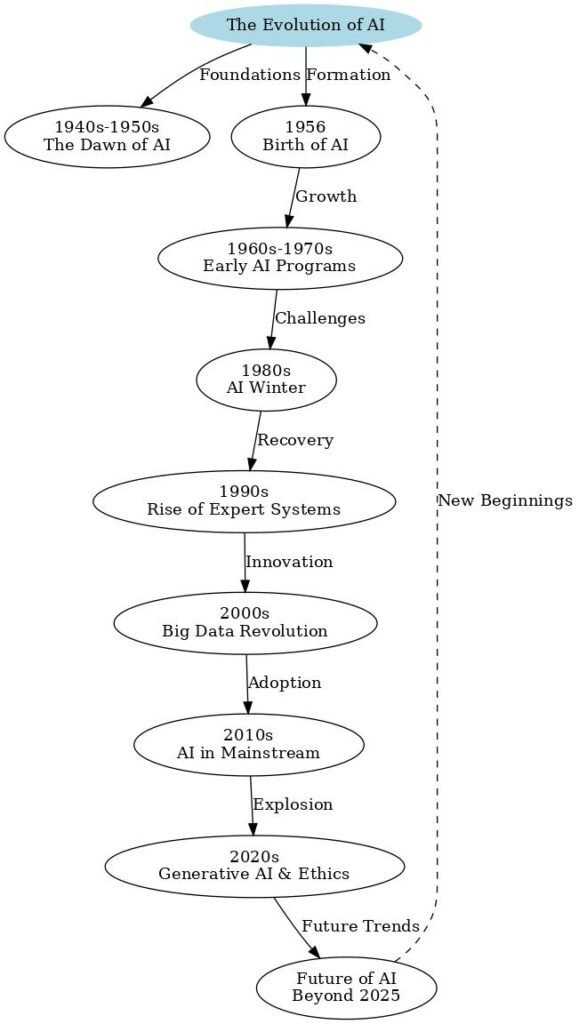

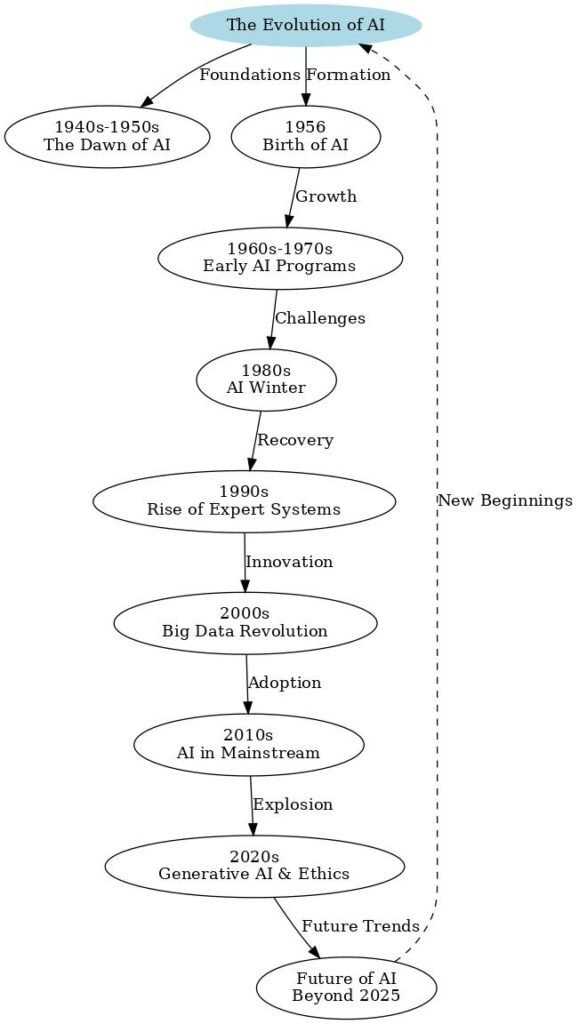

A Timeline of Artificial Intelligence:

From the foundations laid in the 1940s, through the breakthroughs of the 1956 Dartmouth Conference and the AI winters, to the rise of big data in the 2000s, the explosion of generative AI in the 2020s, and the prospect of AGI and human-AI collaboration beyond 2025.

The table below summarizes the history of AI through 2024, with key developments and milestones for each era:

| Era | Period | Key Developments | Milestones |
|---|---|---|---|
| Early Foundations | 1940s–1950s | – Conceptual beginnings of AI through logic and neural networks.<br>– Birth of AI as a field. | – 1943: McCulloch & Pitts model neural networks.<br>– 1950: Alan Turing proposes the Turing Test.<br>– 1956: John McCarthy coins the term “Artificial Intelligence” at Dartmouth. |
| Symbolic AI Era | 1956–1970s | – Early optimism with symbolic AI systems solving basic problems.<br>– First chatbot created. | – Programs like the Logic Theorist and General Problem Solver emerge.<br>– 1966: ELIZA, a natural language program, developed. |
| AI Winter | 1970s–1980s | – Funding cuts due to unmet expectations and system limitations. | – Governments reduce support for AI research. |
| Expert Systems Revival | 1980s–1990s | – Rise of rule-based expert systems applied in industries like healthcare and finance. | – 1981: Japan launches the Fifth Generation Computer Project.<br>– 1987: Expert systems’ decline due to maintenance costs and rigidity. |
| Machine Learning Revolution | 1990s–2000s | – Shift from rule-based systems to data-driven learning using algorithms.<br>– Internet growth enables collection of vast datasets. | – 1997: IBM’s Deep Blue defeats world chess champion Garry Kasparov. |
| Deep Learning Era | 2010s | – Explosion of Deep Learning (multi-layered neural networks) fueled by big data and GPUs.<br>– AI outperforms humans in specific games and tasks.<br>– Natural Language Processing (NLP) advancements emerge. | – 2012: AlexNet revolutionizes image recognition.<br>– 2016: Google DeepMind’s AlphaGo defeats Lee Sedol in Go.<br>– 2018: OpenAI’s GPT improves conversational AI capabilities. |
| AI Integration and Scaling | 2020–2024 | – AI adoption expands across all industries (healthcare, finance, transportation, content creation).<br>– LLMs (Large Language Models) dominate AI research and applications.<br>– Focus on AI ethics, regulations, and potential for Artificial General Intelligence (AGI). | – 2020: GPT-3 released with impressive language generation abilities.<br>– 2023–2024: GPT-4 and other advanced models push human-like reasoning.<br>– Ongoing: AI regulations debated globally to ensure ethical deployment. |

Below, we will explore each of these key stages in greater detail, diving deeper into the innovations, challenges, and milestones that have defined the history of AI.

Early Foundations (1940s–1950s)

AI didn’t start with Siri or ChatGPT—it began as a dream in the minds of visionary scientists who dared to ask: Can machines think like humans?

1.1 Theoretical Concepts: Laying the Groundwork

The story of Artificial Intelligence begins with the foundational question: Can machines simulate human thought? While AI as a term was not yet coined, the roots were planted in the fields of mathematics, philosophy, and logic. Visionaries believed that machines could be built to mimic human reasoning—a radical idea for its time.

The concepts of automation and intelligent machines date back to mythology and early automatons, like the mechanical birds of ancient Greece and Leonardo da Vinci’s sketches of robotic knights. However, it wasn’t until the 20th century that real progress began.

1.2 Alan Turing: The Father of Artificial Intelligence

Alan Turing, the renowned British mathematician, played a pivotal role in AI’s early history. In work that began in the 1930s and continued through the 1940s, Turing developed the idea that a single machine could carry out any computation, provided it was given the correct instructions. This was a groundbreaking shift in thought.

The Turing Test (1950): Turing introduced a method to determine whether a machine could exhibit behavior indistinguishable from a human. In his paper, “Computing Machinery and Intelligence,” he proposed the famous question: “Can machines think?”

- This idea gave rise to the Turing Test, which remains a benchmark for measuring machine intelligence.

- The test involves a human judge interacting with both a machine and another human through text. If the judge cannot reliably tell which is the machine, the machine is said to have passed the test.

Turing’s work laid the philosophical and theoretical groundwork for AI. He foresaw machines becoming smarter over time, a vision that we now see realized with modern advancements.

“Instead of trying to produce a program to simulate the adult mind, why not rather try to produce one which simulates the child’s?” – Alan Turing

1.3 Early Neural Networks: McCulloch-Pitts Model

In 1943, two scientists—Warren McCulloch and Walter Pitts—published a paper titled “A Logical Calculus of the Ideas Immanent in Nervous Activity.” This work was revolutionary because it introduced the concept of artificial neurons.

Key Contributions:

- They modeled the human brain’s functioning through a simple binary system, where each neuron could be “on” or “off.”

- Their neural network was the first step toward building machines that could “learn.”

This early work hinted at machine learning decades before the term became mainstream. Though rudimentary, it sparked the idea that machines could simulate the learning process of the brain.
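To make the “on” or “off” idea concrete, here is a minimal sketch of a threshold neuron in the spirit of the McCulloch-Pitts model. It is a simplification rather than a reproduction of the 1943 paper: the function names are hypothetical, and a negative weight stands in for the paper's inhibitory inputs.

```python
from typing import Sequence


def mp_neuron(inputs: Sequence[int], weights: Sequence[int], threshold: int) -> int:
    """Return 1 ("on") if the weighted sum of binary inputs reaches the threshold, else 0 ("off")."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def logical_and(a: int, b: int) -> int:
    # Both inputs must be on to reach a threshold of 2.
    return mp_neuron([a, b], weights=[1, 1], threshold=2)


def logical_or(a: int, b: int) -> int:
    # A single active input is enough to reach a threshold of 1.
    return mp_neuron([a, b], weights=[1, 1], threshold=1)


def logical_not(a: int) -> int:
    # Assumption: a negative weight models inhibition, so an active input blocks firing.
    return mp_neuron([a], weights=[-1], threshold=0)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", logical_and(a, b), "OR:", logical_or(a, b))
    print("NOT 0:", logical_not(0), "NOT 1:", logical_not(1))
```

Note that nothing here is learned: the weights and thresholds are set by hand, which is why this work counts as a first step toward learning machines rather than a learning machine itself.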

1.4 The Von Neumann Architecture (1945)

John von Neumann, one of the greatest minds in computer science, introduced the architecture for modern computers, which became critical to AI development. His concept of stored programs allowed machines to execute instructions step-by-step, a precursor to AI algorithms.

- Von Neumann’s architecture allowed computers to process instructions in a logical order, similar to how humans follow step-by-step reasoning.

- Without this innovation, modern AI models and machine learning frameworks would not exist.

Impact: Von Neumann’s work enabled the creation of programmable machines, which eventually formed the backbone of AI development.

1.5 First AI Programs and Early Computers (Late 1940s–1950s)

As theoretical foundations matured, real computers began to take shape. Innovations in hardware allowed researchers to explore the potential of machine intelligence.

- ENIAC (1945): The Electronic Numerical Integrator and Computer, one of the first programmable, general-purpose electronic digital computers, showcased the power of automation in solving calculations.

- The Logic Theorist (1955): Created by Allen Newell, Herbert Simon, and Cliff Shaw, this program is widely regarded as the first AI program. It could prove mathematical theorems, demonstrating that machines could simulate logical reasoning.

The success of such programs demonstrated that machines were no longer limited to basic calculations—they could simulate aspects of human cognition.

1.6 The Visionaries and the AI Dream

The 1940s–1950s laid the philosophical and technological foundations for AI. Key figures like Alan Turing, John von Neumann, Warren McCulloch, and Walter Pitts dared to dream of machines that could think and reason.

These pioneers faced skepticism but persevered because they believed in the potential of intelligent machines to transform human life.

- “Could the world we live in today, powered by smart devices and AI tools, have existed without these visionaries?”

Laying the Foundations for the AI Revolution

By the end of the 1950s, the world was on the brink of something extraordinary. The seeds of AI had been planted in theoretical mathematics, philosophy, and the earliest forms of neural networks. Although progress was slow, the dream of creating machines that could think like humans was alive.

“If you think AI’s early days are fascinating, wait until you see what happened next in the 1960s and beyond. Keep reading to uncover the incredible breakthroughs and setbacks that shaped the AI revolution!”

The Birth of AI as a Field (1956)

“One summer, a small group of scientists gathered to answer a profound question: Can machines be made to think? That summer marked the official birth of AI as a field.”

2.1 The Dartmouth Conference: Where It All Began

The year 1956 is etched in history as the official birth of Artificial Intelligence as a recognized field of study. This milestone began with the Dartmouth Conference, organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon.

The Proposal: McCarthy, a visionary computer scientist, proposed the idea of organizing a summer workshop at Dartmouth College. His famous pitch:

“The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

The goal of the conference was to bring together leading minds to explore the possibilities of building machines capable of simulating human intelligence.

Why It Was Important:

- It was the first formal effort to bring AI researchers together under one roof.

- The term “Artificial Intelligence” was coined by John McCarthy in the 1955 proposal for this event.

- The conference laid the foundation for decades of research, sparking unprecedented enthusiasm and investment in AI.

2.2 The Visionaries Behind the Conference

The Dartmouth Conference attracted some of the most brilliant minds of the time:

John McCarthy:

- Often called one of the founding fathers of AI, McCarthy not only coined the term but also invented LISP, the programming language used to develop AI applications for decades.

- His pioneering vision helped formalize AI as an interdisciplinary field.

Marvin Minsky:

- A cognitive scientist and mathematician, Minsky became a key figure in AI development. He believed machines could replicate human reasoning and worked on early neural networks.

- Later, he co-founded the MIT AI Lab, a hub for groundbreaking AI research.

Claude Shannon:

- Known as the father of information theory, Shannon contributed his expertise in logic and computation, laying the groundwork for communication and learning systems in AI.

Nathaniel Rochester:

- A key figure in designing the IBM 701, Rochester brought practical experience with computer programming and systems to the table.

These pioneers believed that AI could progress rapidly with collaborative efforts—an optimism that drove AI’s early years.

2.3 Early Optimism: AI as the Future

The Dartmouth Conference sparked an era of incredible optimism. Researchers believed that human-level AI was just around the corner. Early successes fueled this confidence:

The Logic Theorist (1955): Built by Allen Newell, Herbert Simon, and Cliff Shaw, this program successfully proved mathematical theorems, showing machines could emulate logical reasoning.

Samuel’s Checkers Program (1959): Arthur Samuel developed a program that could learn to play checkers and improve its performance over time. It became one of the first examples of machine learning in action.

These early achievements made headlines and attracted significant funding. Researchers boldly predicted that machines would surpass human intelligence within a few decades.

2.4 The Role of Programming Languages: The Creation of LISP

AI development required tools to program machines efficiently. In 1958, John McCarthy developed LISP (LISt Processing), a programming language specifically designed for AI applications.

Why LISP Was Revolutionary:

- LISP supported symbolic processing, which was critical for developing systems that could mimic human reasoning.

- It enabled researchers to write programs for problem-solving, natural language processing, and early machine learning models.

Impact on AI:

- LISP became the de facto programming language for AI for several decades.

- It facilitated research into symbolic reasoning and complex algorithms.

2.5 Key Challenges During the Early Phase

Despite the optimism, the early AI pioneers faced significant challenges:

Limited Computational Power:

- Computers of the 1950s were slow, expensive, and had limited memory. Researchers had grand ideas, but hardware constraints stalled progress.

Lack of Data:

- AI systems require large datasets to learn effectively. In the 1950s, access to digitized data was minimal.

Complexity of Human Intelligence:

- The assumption that replicating human reasoning would be straightforward proved overly optimistic. Human intelligence was far more complex than initially believed.

Funding Dependencies:

- Early funding came primarily from government agencies. Although investments were significant, unrealistic expectations sometimes led to frustration among stakeholders.

“If you were building AI in the 1950s with no modern computers or big data, how far do you think you could go?”

2.6 Legacy of the Dartmouth Conference

The Dartmouth Conference established AI as a legitimate scientific field and introduced ideas that would influence decades of innovation. Although progress was slower than expected, the optimism of the 1950s was critical:

- It attracted funding and resources for AI research.

- It established key principles of AI, including symbolic reasoning and early machine learning.

- It fostered a collaborative, interdisciplinary approach that remains a hallmark of AI research today.

John McCarthy’s vision lived on, as researchers continued striving to answer the fundamental question: Can machines think?

A Dream Realized and the Journey Ahead

The Dartmouth Conference of 1956 marked the moment when AI transitioned from an idea to a formal scientific pursuit. Though the road ahead was filled with challenges, the optimism and groundbreaking work of the 1950s paved the way for the future.

“Curious to see how AI evolved after the optimism of the 1950s? Continue reading to explore the rise, fall, and rebirth of AI in the decades that followed!”

The Rise of AI (1960s–1970s)

“What happens when bold predictions meet the limits of technology? The 1960s and 1970s answered this question with a mix of early successes, frustrations, and crucial lessons that would shape AI for decades to come.”

3.1 The Golden Age of AI Research (1960s)

The 1960s marked the beginning of what is often referred to as the Golden Age of AI. Fueled by the optimism from the Dartmouth Conference and early successes, researchers made significant strides in developing machines capable of performing tasks that were once thought exclusive to humans.

Key Milestones:

- ELIZA (1966): Developed by Joseph Weizenbaum, ELIZA was a pioneering natural language processing program that could simulate conversation. ELIZA’s DOCTOR script mimicked a Rogerian psychotherapist, demonstrating that machines could hold basic, human-like conversations.

- Shakey the Robot (1966): Created by the Stanford Research Institute, Shakey was the first mobile robot to combine perception, reasoning, and action. Shakey could navigate its environment, making it a landmark achievement in the integration of AI and robotics.

- Computer Chess (1967): Researchers began developing AI systems that could play chess competitively. Early programs like Mac Hack VI, written by Richard Greenblatt at MIT, showcased AI’s potential in strategic thinking and decision-making.

These advancements were celebrated as groundbreaking, and many researchers believed that achieving human-level intelligence was within reach. The 1960s were characterized by expanding the possibilities of what AI could do—albeit on a smaller, more limited scale.
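ELIZA worked by matching keywords in the user's input and slotting fragments of it into canned reply templates. The sketch below illustrates that idea only; the rules, the `respond` function, and the default reply are hypothetical and are not taken from Weizenbaum's original DOCTOR script.

```python
import re

# Hypothetical, tiny rule set: (pattern, reply template). Not Weizenbaum's original script.
RULES = [
    (re.compile(r"\bI need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."


def respond(utterance: str) -> str:
    """Return the reply for the first matching rule, or a neutral prompt if none match."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Reuse the captured fragment of the user's own words in the reply.
            return template.format(*match.groups())
    return DEFAULT_REPLY


if __name__ == "__main__":
    for line in ("I need a vacation.", "I am tired", "It is about my mother", "Hello"):
        print(">", line)
        print(respond(line))
```

The program has no model of meaning at all, which is part of why ELIZA's apparent fluency surprised people at the time.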

3.2 The Shift from Symbolic AI to Heuristic Approaches

In the early days of AI, researchers primarily focused on symbolic AI—systems that represented knowledge in symbols and used logical reasoning to make decisions. However, the limitations of symbolic AI became evident in the late 1960s and early 1970s, particularly when trying to tackle more complex, real-world problems.

- The Problem of Knowledge Representation: Symbolic AI relied heavily on explicitly programmed rules and knowledge bases, which made it difficult for machines to adapt to new situations or deal with uncertainty.

Heuristic Search: As purely rule-driven approaches struggled, researchers leaned more heavily on flexible techniques such as heuristic search. Rather than exhaustively examining every possibility, a heuristic search uses rules of thumb to estimate which options look most promising and explores those first.

- Heuristic Search Algorithms: Programs like General Problem Solver (GPS) and game-playing algorithms evolved to use heuristics, which helped AI navigate complex decision trees more efficiently.
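As an illustration of heuristic search, the sketch below uses A*, an algorithm from the same era (published in 1968). It is swapped in here as a compact example and is not the method used by the General Problem Solver. The grid, the `a_star` function, and the Manhattan-distance heuristic are assumptions chosen for brevity.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]

# Hypothetical 4x4 map: 0 = free cell, 1 = wall.
GRID = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]


def manhattan(a: Cell, b: Cell) -> int:
    """Heuristic: grid distance ignoring walls (never overestimates the true cost)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def a_star(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    rows, cols = len(grid), len(grid[0])
    frontier = [(manhattan(start, goal), 0, start)]  # (estimated total, cost so far, cell)
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    best_cost: Dict[Cell, int] = {start: 0}
    while frontier:
        _, cost, current = heapq.heappop(frontier)
        if current == goal:
            # Walk the parent links back to the start to rebuild the path.
            path: List[Cell] = []
            node: Optional[Cell] = current
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if cost > best_cost[current]:
            continue  # A cheaper route to this cell was already expanded.
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = current
                    # The heuristic steers the search toward cells that look closer to the goal.
                    heapq.heappush(frontier, (new_cost + manhattan(nxt, goal), new_cost, nxt))
    return None  # Goal is unreachable.


if __name__ == "__main__":
    print(a_star(GRID, (0, 0), (3, 3)))
```

The heuristic does not change what counts as a valid solution; it only changes the order in which candidates are examined, which is where the efficiency gain comes from.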

3.3 AI in Robotics: The Integration of Perception and Action

Robotics became an increasingly important field in AI during the 1960s and 1970s. Researchers focused on creating robots that could interact with the real world, requiring not only perception (the ability to see, hear, and touch) but also action (the ability to move and manipulate objects).

Notable Contributions:

- Shakey the Robot (1966): As mentioned earlier, Shakey’s development was one of the first attempts at combining perception, reasoning, and physical action. The robot could use its sensors to perceive its environment and plan a path to reach a goal.

- Stanford Cart (1970s): This autonomous vehicle, developed at Stanford University, could navigate through rooms by interpreting its environment using video input. It was one of the earliest mobile robots to demonstrate autonomous navigation.

These early robots showcased the power of AI in action, but they also revealed the technical challenges involved. Robotic AI systems struggled with real-time decision-making and environmental unpredictability, underscoring the complexity of designing machines that could operate in dynamic, real-world settings.

3.4 The Rise of Expert Systems (1970s)

In the 1970s, the field of AI saw the emergence of expert systems—software applications designed to mimic the decision-making abilities of human experts in specific domains. Expert systems became highly influential in practical AI applications.

What Is an Expert System?

- An expert system is a knowledge-based system that uses if-then rules to simulate the reasoning process of a human expert. These systems were used for tasks like medical diagnosis, legal reasoning, and even troubleshooting computer hardware.

- The MYCIN system (1972), developed at Stanford University, was an early expert system used to diagnose bacterial infections and recommend antibiotics. MYCIN was considered one of the most successful applications of AI at the time.
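The if-then style of reasoning can be sketched with a tiny forward-chaining rule engine. This is an illustration under stated assumptions: the hardware-troubleshooting rules and the `forward_chain` function are hypothetical, and the approach is deliberately simpler than MYCIN, which reasoned backward from hypotheses and attached certainty factors to its conclusions.

```python
from typing import FrozenSet, List, Set, Tuple

Rule = Tuple[FrozenSet[str], str]  # (conditions that must all hold, conclusion)

# Hypothetical toy rules for troubleshooting a computer that will not start.
RULES: List[Rule] = [
    (frozenset({"no_power_light", "cable_plugged_in"}), "suspect_power_supply"),
    (frozenset({"suspect_power_supply"}), "recommend_replace_power_supply"),
    (frozenset({"power_light_on", "no_display"}), "suspect_monitor_cable"),
]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Apply rules repeatedly until no rule can add a new fact."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when every one of its conditions is already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    observed = {"no_power_light", "cable_plugged_in"}
    print(sorted(forward_chain(observed, RULES)))
```

Every conclusion can be traced back to the rules that produced it, which made such systems easy to explain. It also hints at their weakness: each new situation needs a human to write another rule.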

Why Expert Systems Were Important:

- Expert systems demonstrated the practical utility of AI. Unlike previous AI programs that were experimental, expert systems were used in real-world industries to make complex decisions.

- They bridged the gap between theoretical research and commercial applications of AI.

3.5 The AI Winter: Challenges and Setbacks

Despite the initial excitement and optimism of the 1960s and early 1970s, the field of AI began to face significant challenges. The ambitious predictions made by early researchers were not realized as quickly as expected, leading to a period of disappointment and reduced funding in the mid-to-late 1970s. This period is known as the AI Winter; a second downturn followed in the late 1980s, when the market for expert systems declined.

Reasons for the AI Winter:

- Overpromises and Underperformance: Many of the initial goals for AI, such as machines achieving human-level intelligence, were unrealistic given the technology available at the time.

- High Costs and Limited Progress: AI systems required expensive hardware and extensive human labor to build and maintain. The slow pace of tangible results led to a reduction in funding and enthusiasm.

- Lack of Scalability: Early AI systems, including expert systems, struggled to scale effectively and deal with the complexity of real-world knowledge.

Despite these setbacks, the 1970s laid the groundwork for future developments in AI. Expert systems would later be revived in the 1980s, and the development of machine learning and neural networks in the 1980s and 1990s would address many of the challenges faced during this period.

- “If AI is so powerful today, why do you think it took decades for us to get to this point? Was the AI Winter inevitable?”

The Rise and Fall of Early AI Dreams

The 1960s and 1970s were a time of both great achievement and disappointment in the field of AI. Researchers made significant strides in developing early AI systems, but the technological limitations and complexity of human intelligence led to frustrations that caused a slowdown in progress. Despite this, the vision of AI as a field of study persisted and would continue to grow, laying the foundation for the AI breakthroughs that followed.

“Want to learn more about how AI overcame its early setbacks and began to thrive in the 1980s and beyond? Keep reading as we dive into the era of machine learning and neural networks!”

AI and the Development of Machine Learning (1980s–1990s)

“As AI stumbled in its early years, a new wave of ideas emerged in the 1980s that would change the course of the field forever. Machine learning, an approach in which machines improve from data and experience much as humans do, came into its own, ushering in a new era of possibilities for artificial intelligence.”

4.1 The Revival: The Rise of Neural Networks

By the 1980s, AI was undergoing a revival. The AI Winter of the 1970s had slowed the field, but a fresh approach to artificial intelligence emerged. Neural networks, first explored in the 1950s and 1960s, found new relevance thanks to advances in computing power and better learning algorithms.

The Rediscovery of Neural Networks:

- Early neural networks, like the perceptron, were limited: a single-layer perceptron cannot learn functions that are not linearly separable, such as XOR. However, backpropagation, a method for training multi-layer neural networks, was popularized in the 1980s by researchers such as David Rumelhart, Geoffrey Hinton, and Ronald J. Williams.

- Backpropagation allowed for the training of deep networks by adjusting the weights of connections based on the error of the output. This breakthrough opened the door for more complex and capable neural network models.
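The weight-adjustment idea can be sketched in plain Python. The example below trains a very small network on XOR with full-batch gradient descent. It is a sketch under stated assumptions, not a historical implementation: the `TinyMLP` class, the layer size, the learning rate, and the random seed are all hypothetical choices, and training is not guaranteed to reach a perfect solution.

```python
import math
import random
from typing import List, Sequence, Tuple

XOR_DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class TinyMLP:
    """2 inputs -> `hidden` sigmoid units -> 1 sigmoid output."""

    def __init__(self, hidden: int = 4, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.b2 = 0.0

    def forward(self, x: Sequence[float]) -> Tuple[List[float], float]:
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = sigmoid(sum(w * hi for w, hi in zip(self.w2, h)) + self.b2)
        return h, y

    def loss(self, data) -> float:
        """Mean squared error over the dataset."""
        return sum((self.forward(x)[1] - t) ** 2 for x, t in data) / len(data)

    def train_step(self, data, lr: float) -> None:
        # Accumulate gradients over the whole batch, then update every weight once.
        g_w1 = [[0.0, 0.0] for _ in self.w1]
        g_b1 = [0.0] * len(self.b1)
        g_w2 = [0.0] * len(self.w2)
        g_b2 = 0.0
        for x, t in data:
            h, y = self.forward(x)
            # Error signal at the output unit (derivative of squared error through the sigmoid).
            delta_out = 2 * (y - t) * y * (1 - y)
            g_b2 += delta_out
            for j, hj in enumerate(h):
                g_w2[j] += delta_out * hj
                # Propagate the output error backward through w2 to hidden unit j.
                delta_h = delta_out * self.w2[j] * hj * (1 - hj)
                g_b1[j] += delta_h
                for i, xi in enumerate(x):
                    g_w1[j][i] += delta_h * xi
        n = len(data)
        for j in range(len(self.w2)):
            self.w2[j] -= lr * g_w2[j] / n
            self.b1[j] -= lr * g_b1[j] / n
            for i in range(2):
                self.w1[j][i] -= lr * g_w1[j][i] / n
        self.b2 -= lr * g_b2 / n


if __name__ == "__main__":
    net = TinyMLP()
    print("loss before training:", net.loss(XOR_DATA))
    for _ in range(5000):
        net.train_step(XOR_DATA, lr=0.5)
    print("loss after training:", net.loss(XOR_DATA))
```

The key step is the `delta_h` line: the error measured at the output is passed backward through the connection weights, so hidden units receive a share of the blame proportional to their contribution.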

Key Milestones:

- Multilayer Perceptrons (MLPs): Neural networks with multiple layers were able to solve problems that were previously unsolvable with simpler models.

- Deep Learning Foundations: The foundational principles of deep learning began to take shape, despite its slow adoption at the time due to limited computational power.

4.2 Expert Systems and the Surge of Knowledge-Based AI

While machine learning and neural networks were gaining ground, expert systems continued to dominate the practical applications of AI during the 1980s.

The Boom of Expert Systems:

- By the early 1980s, expert systems like XCON, developed by Digital Equipment Corporation (DEC), began to be implemented in real-world business applications. These systems were used for complex decision-making in fields such as medical diagnosis, financial planning, and engineering design.

- XCON, for instance, helped configure complex computer systems for customers, making it one of the first widely used expert systems in business.

Why Expert Systems Were Important:

- Expert systems used knowledge representation to simulate the decision-making of human experts, providing valuable assistance in domains that required specialized knowledge.

- However, despite their success, expert systems had limitations, such as the inability to handle uncertainty or learn from new data, which set the stage for machine learning approaches to shine.

4.3 The Emergence of Machine Learning as a Dominant Force

As AI researchers explored ways to move beyond expert systems, the field of machine learning (ML) began to emerge as a dominant force in AI development. The goal of machine learning was simple: build algorithms that allowed machines to learn from data and improve over time without being explicitly programmed.

Supervised Learning:

- A major breakthrough in machine learning came with the development of supervised learning algorithms. These algorithms allowed AI systems to be trained on labeled data, where the input data had corresponding output labels. This enabled AI systems to recognize patterns and make predictions based on new, unseen data.

Key Examples:

- Decision Trees, Support Vector Machines (SVMs), and k-Nearest Neighbors (k-NN) became popular techniques in supervised learning during the 1980s and 1990s.
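Of the techniques named above, k-Nearest Neighbors is the simplest to sketch: a new point takes the majority label of its closest labeled examples. The training data and the `knn_predict` function below are hypothetical and exist only to illustrate the idea.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]  # (feature vector, label)

# Hypothetical labeled training data with two numeric features per example.
TRAINING_DATA: List[Sample] = [
    ((1.0, 1.0), "small"),
    ((1.5, 2.0), "small"),
    ((2.0, 1.0), "small"),
    ((6.0, 6.0), "large"),
    ((7.0, 7.5), "large"),
    ((8.0, 6.5), "large"),
]


def knn_predict(train: List[Sample], query: Sequence[float], k: int = 3) -> str:
    """Label the query with the majority label among its k nearest training points."""
    by_distance = sorted(train, key=lambda sample: math.dist(sample[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    print(knn_predict(TRAINING_DATA, (1.2, 1.4)))
    print(knn_predict(TRAINING_DATA, (7.0, 7.0)))
```

There is no separate training phase here: the labeled examples are the model, which shows how directly supervised learning depends on the quality and quantity of labeled data.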

Unsupervised Learning:

- Researchers also explored unsupervised learning, where the machine was given unlabeled data and tasked with finding hidden patterns on its own. This was particularly useful for tasks like clustering and dimensionality reduction.
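Clustering can be illustrated with k-means, one common unsupervised algorithm, used here as an example rather than as the specific method any particular research group relied on. The points, the `k_means` function, and the naive choice of starting centroids are assumptions made to keep the sketch short.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

# Hypothetical unlabeled points forming two loose groups.
POINTS: List[Point] = [(1.0, 1.0), (9.0, 9.0), (1.5, 2.0), (8.0, 8.5), (2.0, 1.5), (9.5, 8.0)]


def k_means(points: Sequence[Point], k: int, iterations: int = 10) -> Tuple[List[Point], List[int]]:
    """Alternate between assigning points to the nearest centroid and recomputing centroids."""
    # Assumption for brevity: the first k points serve as the starting centroids.
    centroids = list(points[:k])
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its assigned points.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = (
                    sum(p[0] for p in members) / len(members),
                    sum(p[1] for p in members) / len(members),
                )
    return centroids, labels


if __name__ == "__main__":
    centers, assignment = k_means(POINTS, k=2)
    print(centers)
    print(assignment)
```

No labels are supplied at any point; the grouping emerges from the distances between the points alone.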

The Impact of Data:

- One of the key drivers of this shift was the growing availability of large datasets and the increasing computational power of mainframe computers and supercomputers.

- Machine learning algorithms leveraged these resources to automatically uncover insights from data, moving away from the rigid, rule-based systems that dominated AI before.

4.4 AI in Commercial Applications: The Advent of AI in Business

During the 1990s, AI began to move beyond the academic and research labs and into commercial applications. As businesses sought ways to leverage technology for competitive advantage, AI solutions became integral to operations across industries.

AI in Finance:

- Algorithmic trading and credit scoring became two of the most prominent applications of AI in the finance sector. Machine learning algorithms began to play a role in predicting stock market trends and evaluating the risk associated with lending.

- The rise of data mining techniques also helped financial institutions identify hidden patterns in large volumes of data.

AI in Healthcare:

- Medical diagnosis systems were refined using neural networks and expert systems to assist doctors in diagnosing diseases such as cancer, heart disease, and diabetes.

- IBM’s Watson Health, though developed later, is a good example of AI in healthcare, utilizing machine learning and NLP to analyze patient data and suggest treatments.

AI in Manufacturing:

- In manufacturing, AI-powered robotics helped automate repetitive tasks, improve efficiency, and reduce costs. Advances in industrial automation led to systems capable of executing tasks that were previously done by human workers, such as sorting, packaging, and assembly.

4.5 The Limitations of AI: Challenges and Roadblocks

While the 1980s and 1990s witnessed the blossoming of machine learning and commercial AI, significant challenges persisted.

Lack of Data:

- Despite advancements, AI systems often suffered from the lack of sufficient data to train models effectively. Early machine learning algorithms needed large amounts of labeled data to function well, which was not always available in various industries.

Computational Limitations:

- Although AI research in the 1990s benefited from faster computers, processing power remained a bottleneck for many algorithms, particularly those requiring large-scale data analysis.

Overfitting and Underfitting:

- As AI systems became more complex, they often faced the problem of overfitting (where models became too tailored to the training data) or underfitting (where models failed to capture essential patterns in the data).


- “What do you think was the most crucial breakthrough in AI during the 1990s? Was it the rise of machine learning, or was it the commercial adoption of AI in business?”

Machine Learning’s Emergence as a Game Changer

The 1980s and 1990s marked a pivotal period in the history of AI. Neural networks, expert systems, and the early development of machine learning laid the groundwork for the AI revolution of the 21st century. Though the field faced challenges, the idea that machines could learn from data began to take hold, paving the way for more sophisticated systems in the decades that followed.

“Eager to see how AI evolved in the 21st century and how machine learning led to deep learning? Keep reading to uncover the exciting developments that shaped modern AI!”

AI in the 21st Century: The Rise of Deep Learning and Big Data (2000s–Present)

“The 21st century has been nothing short of a revolution for AI, as deep learning and big data have combined to fuel an unprecedented leap in AI capabilities. But how did we get here? Let’s explore the pivotal developments that transformed AI from a research curiosity to the backbone of modern technologies.”

5.1 The Deep Learning Breakthrough: A New Era for AI

In the early 2000s, deep learning—a subset of machine learning—began to gain traction. Though its roots can be traced back to the neural networks of the 1980s, advances in computational power, large-scale datasets, and novel algorithms ignited the deep learning revolution that would fundamentally change AI.

Why Deep Learning?:

- Deep learning involves using artificial neural networks with many layers (hence the term “deep”), enabling systems to learn more complex patterns from vast amounts of data.

- Unlike traditional machine learning, deep learning algorithms learn useful features directly from raw data, greatly reducing the need for human-engineered features and allowing them to tackle tasks that had long resisted earlier methods, such as image and speech recognition.

The Role of Big Data:

- As the world became increasingly digitized, the availability of massive datasets skyrocketed. Social media platforms, e-commerce websites, and sensors all generated enormous amounts of data—feeding deep learning algorithms.

- Big data played a critical role in training deep learning models, which required huge volumes of diverse data to accurately recognize patterns and make predictions.

Key Milestones in Deep Learning:

- 2006: Geoffrey Hinton and his team introduced the concept of deep belief networks (DBNs), laying the groundwork for modern deep learning.

- 2012: A breakthrough moment occurred when a deep neural network, AlexNet, won the ImageNet Large Scale Visual Recognition Challenge by a significant margin. The performance of AlexNet marked a turning point in computer vision and deep learning, demonstrating the power of large-scale deep neural networks.

5.2 The Explosion of AI in Everyday Life

With deep learning at the forefront, AI began to seep into everyday applications, transforming industries, businesses, and consumer experiences.

AI in Computer Vision:

- Computer vision is one of the most successful areas powered by deep learning. Algorithms now have the ability to understand and interpret images and videos, fueling applications in fields ranging from autonomous driving to medical imaging.

- Companies like Google, Microsoft, and Facebook leveraged deep learning to power facial recognition systems, object detection, and image classification at massive scales.

AI in Natural Language Processing (NLP):

- Advances in NLP, powered by deep learning, allowed machines to understand and generate human language. This paved the way for systems like chatbots, virtual assistants (e.g., Siri, Alexa), and automated translation services.

- In 2019, OpenAI released GPT-2, a language model capable of generating coherent text, further cementing deep learning’s dominance in NLP.

AI in Healthcare:

- AI applications in healthcare accelerated, with deep learning enabling image recognition for diagnostics, personalized medicine, and predictive models for disease outbreaks.

- IBM Watson Health gained attention for its ability to analyze medical literature and patient data to recommend treatments for various cancers and other conditions.

5.3 The Role of GPUs and Hardware Innovation in AI Progress

The success of deep learning would not have been possible without the significant improvements in computing hardware.

Graphics Processing Units (GPUs):

- GPUs were originally designed for rendering graphics in video games, but researchers quickly realized their power in processing the massive amounts of data required by deep learning models.

- Unlike traditional CPUs, which are built to run a small number of threads very quickly, GPUs run thousands of simple calculations at the same time. That makes them well suited to deep learning, where the same arithmetic (mostly matrix multiplication) is applied to large batches of data in parallel.
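To make the idea of parallel work concrete, here is a minimal sketch in plain Python. It is not GPU code: it only shows that each row of a matrix product can be computed independently of the others, which is the property GPUs exploit. The function names and the tiny matrices are illustrative assumptions, and Python threads will not make this faster in practice.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """Dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(row, col))


def matmul_sequential(a, b):
    """Compute a @ b one output row after another."""
    cols = list(zip(*b))  # columns of b
    return [[dot(row, col) for col in cols] for row in a]


def matmul_parallel(a, b, workers=4):
    """Compute a @ b by handing each output row to a separate worker.

    No row depends on any other row, so the work can be split freely.
    A GPU applies the same idea at the level of individual output cells.
    """
    cols = list(zip(*b))

    def compute_row(row):
        return [dot(row, col) for col in cols]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(compute_row, a))


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]

print(matmul_sequential(A, B))  # [[19, 22], [43, 50]]
print(matmul_parallel(A, B))    # same result, rows computed independently
```

Both functions return the same answer. The only difference is how the independent pieces of work are scheduled, and that scheduling freedom is what specialized hardware turns into speed.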

Google’s TPUs:

- In 2016, Google introduced Tensor Processing Units (TPUs), specialized hardware designed to accelerate machine learning tasks, particularly deep learning. TPUs, combined with Google Cloud, allowed AI researchers and companies to scale their machine learning models more efficiently.

Quantum Computing:

- While still in its infancy, quantum computing is often described as a possible next frontier for AI. In theory, quantum computers could speed up certain computations used in machine learning, although practical advantages for training deep learning models have not yet been demonstrated. If they materialize, they could open new possibilities in fields like drug discovery, material science, and cryptography.

5.4 AI Becomes a Business Imperative

As AI technologies matured, they became essential for businesses across industries, transforming how companies interacted with customers, operated internally, and made decisions.

AI in E-commerce and Retail:

- AI-driven recommendation engines are now integral to platforms like Amazon and Netflix, helping customers discover products and media based on their preferences and behavior.

- Chatbots and virtual assistants powered by NLP also revolutionized customer service, providing instant responses and personalized recommendations.
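As a rough illustration of how a recommendation engine can use preferences and behavior, the sketch below implements a toy user-based collaborative filter. It is an assumption-laden example, not how Amazon or Netflix actually work: the users, items, ratings, and function names are all made up for illustration.

```python
from math import sqrt

# Hypothetical ratings: user -> {item: rating from 1 to 5}
ratings = {
    "ana": {"book": 5, "lamp": 3, "mug": 4},
    "ben": {"book": 4, "lamp": 3, "tent": 5},
    "cat": {"lamp": 1, "mug": 5},
}


def cosine(u, v):
    """Cosine similarity between two users' rating dictionaries."""
    shared = set(u) & set(v)
    if not shared:
        return 0.0
    num = sum(u[i] * v[i] for i in shared)
    den = sqrt(sum(x * x for x in u.values())) * sqrt(sum(x * x for x in v.values()))
    return num / den


def recommend(user, ratings, k=1):
    """Return up to k unseen items, scored by similarity-weighted ratings."""
    seen = ratings[user]
    scores = {}
    for other, theirs in ratings.items():
        if other == user:
            continue
        sim = cosine(seen, theirs)
        for item, rating in theirs.items():
            if item not in seen:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:k]


print(recommend("cat", ratings))  # ['book']
print(recommend("ana", ratings))  # ['tent']
```

The design choice here is simplicity: users who rated shared items similarly count for more when scoring items the target user has not seen. Production systems typically combine many more signals and far larger models.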

AI in Finance:

- In finance, AI has improved fraud detection, algorithmic trading, and credit risk assessment. Companies like PayPal, Square, and Goldman Sachs use machine learning models to identify patterns in transaction data and make faster, more accurate decisions.
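To give a feel for what "identifying patterns in transaction data" can mean at its simplest, the sketch below flags transactions whose amount is far from the average, using a z-score. This is a deliberately basic statistical baseline, not the method any of the companies above use. The amounts, the threshold, and the function name are assumptions for illustration.

```python
from statistics import mean, stdev


def flag_outliers(amounts, threshold=2.0):
    """Return indexes of amounts more than `threshold` standard deviations from the mean."""
    mu = mean(amounts)
    sigma = stdev(amounts)
    if sigma == 0:
        return []  # all amounts identical, nothing stands out
    return [i for i, x in enumerate(amounts) if abs(x - mu) / sigma > threshold]


# Hypothetical card transactions in dollars; the last one is unusually large.
amounts = [20, 25, 22, 19, 24, 21, 23, 500]

print(flag_outliers(amounts))  # [7]
```

Real fraud models look at many features at once (merchant, location, timing, device) and learn from labeled examples, but the goal is the same: surface the transactions that do not fit the usual pattern.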

AI in Marketing:

- AI-powered tools let marketers optimize campaigns, target the right audiences, and forecast trends. Personalized advertising has become more efficient as algorithms analyze user data and adjust ads in real time.

AI in Autonomous Vehicles:

- Companies like Tesla, Waymo, and Uber have poured significant resources into developing autonomous vehicles powered by AI. Deep learning models process vast amounts of data from sensors, cameras, and LiDAR systems to allow self-driving cars to navigate roads without human intervention.

5.5 The Ethical Debate: Challenges and Responsibilities of AI

As AI systems began to proliferate, so did concerns regarding their ethical implications. The more advanced these systems became, the more pressing the need for ethical guidelines in their development and deployment.

AI Bias:

- Bias in AI models is a critical concern, especially in areas like criminal justice and hiring practices, where algorithms may unintentionally perpetuate societal biases. For example, facial recognition algorithms have been shown to exhibit racial and gender biases.
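One simple way to check a model for this kind of bias is to compare how often it gives a favorable outcome to each group. The sketch below computes per-group selection rates and the gap between them, a measure often called the demographic parity difference. The records and group labels are hypothetical, and a single number like this is a starting point for an audit, not a complete fairness assessment.

```python
def selection_rates(records):
    """Share of favorable outcomes per group.

    `records` is a list of (group, selected) pairs, where `selected`
    is True when the model produced the favorable outcome.
    """
    totals, positives = {}, {}
    for group, selected in records:
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(selected)
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(records):
    """Difference between the highest and lowest group selection rate."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical hiring-model decisions for two groups, "a" and "b".
records = [
    ("a", True), ("a", True), ("a", False), ("a", True),
    ("b", True), ("b", False), ("b", False), ("b", False),
]

print(selection_rates(records))  # {'a': 0.75, 'b': 0.25}
print(parity_gap(records))       # 0.5
```

A gap of 0 would mean both groups are selected at the same rate. A large gap does not prove discrimination on its own, but it signals that the model's behavior deserves a closer look.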

AI and Job Displacement:

- While AI has created new job opportunities, there are concerns about job displacement as automation takes over routine and manual tasks. From manufacturing to customer service, AI has the potential to replace human workers in various industries.

The Need for Regulations:

- Governments and regulatory bodies are increasingly focusing on AI regulation to ensure that AI technologies are developed responsibly and transparently. The European Union’s Artificial Intelligence Act, which entered into force in 2024, takes a risk-based approach and sets requirements for high-risk AI applications, focusing on safety, transparency, and fairness.

5.6 The Future of AI: What’s Next?

As AI continues to evolve, the next frontier promises even more innovation. AI researchers are looking towards general AI (machines that can perform any intellectual task a human can), along with new breakthroughs in AI creativity, emotional intelligence, and collaborative intelligence.

General AI vs. Narrow AI:

- While narrow AI is designed for specific tasks, general AI aims to replicate human-level cognition and decision-making across a variety of contexts.

AI in Creativity:

- AI is already being used in creative fields like art, music composition, and writing. The ability of AI to generate artistic works will only grow, leading to new forms of AI-human collaboration.

Human-AI Collaboration:

- The future of AI will likely be defined by collaborative intelligence, where AI systems work alongside humans, enhancing human capabilities rather than replacing them entirely.

“Want to stay ahead of the curve? Follow the developments in AI as we explore general AI, quantum computing, and AI creativity in the next few years. The future of AI is unfolding right before our eyes!”

The Transformation of AI in the 21st Century

The rise of deep learning, combined with big data and specialized hardware, has pushed AI to new heights of capability and application. From computer vision to natural language processing, AI now permeates many aspects of modern life, while its ethical and societal implications continue to spark critical discussions.

Hasnain Aslam is a seasoned finance blogger and digital marketing strategist with a strong expertise in SEO, content marketing, and business growth strategies. With years of experience helping entrepreneurs and businesses boost their online presence and maximize organic traffic, he specializes in crafting high-impact content that ranks on search engines and drives real results. His insights empower professionals to build sustainable digital success through strategic marketing and innovative SEO techniques.
